What if your company could deploy its own ChatGPT?

What if employees could share sensitive information with the LLM without contravening company policy?

What if non-technical users could interact with complex datasets through a simple chat interface?

These are just a few of the benefits of private LLM hosting.

With the 2099 Group blueprint, you get your own off-the-shelf, customisable LLM deployment. It can run on your own infrastructure: inside your own cloud deployment, on your own network, or even on your own hardware. We offer several options for physical or cloud-based hosting, using the latest NVIDIA H200 chips based in London and provided by our cloud hosting partner. These can run the latest and largest models available, including Mixtral 88B or DeepSearch 102B, which are considered the gold standard in industry benchmarks for self-hosted artificial intelligence.

We can help you develop and run your own private LLM hosting to enable your employees to benefit from AI securely.

We can also help you expose company data to the LLM in a safe, controlled environment. MCP (Model Context Protocol) connectors are changing the AI field by letting large language models perform actions such as database queries, internet searches, custom API requests and more. Your users benefit by asking the AI to carry out these actions without needing to know how to do them themselves.

Use cases include:

All this without data ever leaving your environment! All actions can be taken from within your own infrastructure.

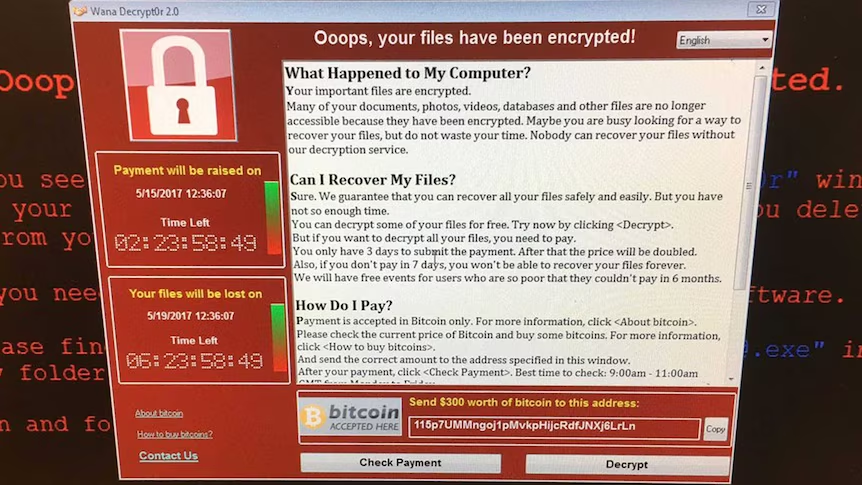

Every day, employees around the world inadvertently shovel sensitive information directly into AI chatbot conversations. That information may be confidential or covered by privacy laws, and it can include credentials such as API keys and database passwords, as well as source code, industry secrets or other company IP.

Companies like OpenAI, Anthropic and others know about this, but they cannot do anything to prevent it without turning customers away from their product. What are companies doing about it? Some have started to write AI policies, but few offer practical solutions that still let their staff harness the power of AI in their day-to-day work.

Our blueprint solution for private LLM services includes a browser-based user interface that users can use to interact with any number of LLMs. The interaction looks like a typical AI chat interface. Access can be protected at the network level (corporate network / VPN only), at the application level (by requiring single sign-on), or both. We recommend both, for security and a better user experience.
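To make the "both" option concrete, here is a minimal sketch of the combined check, written in plain Python. It is not taken from our blueprint: the network ranges, the `Request` type and the `sso_user` field are hypothetical, and in a real deployment these checks would normally live in your VPN, firewall, reverse proxy and identity provider rather than in application code.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

# Hypothetical corporate / VPN ranges; replace with your own.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.50.0/24"),
]


@dataclass
class Request:
    client_ip: str           # address the request arrived from
    sso_user: Optional[str]  # assumed to be set by the SSO layer after login


def on_corporate_network(client_ip: str) -> bool:
    """Network-level check: is the caller inside an allowed range?"""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed addresses are rejected
    return any(addr in net for net in ALLOWED_NETWORKS)


def is_allowed(req: Request) -> bool:
    """Require both layers: an allowed network and a signed-in user."""
    return on_corporate_network(req.client_ip) and req.sso_user is not None
```

With both layers required, a signed-in user outside the VPN is refused, and so is an anonymous request from inside the network.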

The user interface, an OpenWebUI instance, can be deployed together with any number of MCP connectors that enhance the capabilities of your LLMs, for example by giving them access to real business data, documents and policies, or by enabling custom actions. If you have a requirement that is not listed here, we can work with you to develop custom MCP connectors that achieve the effect you want from your LLMs.
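As a rough illustration of what a connector does, the sketch below registers one named tool that runs a fixed, parameterised, read-only query against a database. It is a simplified stand-in and not an MCP implementation: it uses no MCP SDK and omits the protocol's transport and handshake. The `orders` table, the `order_total` tool and the `call_tool` dispatcher are all hypothetical names chosen for the example.

```python
import json
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Build an in-memory database standing in for real business data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, total REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("acme", 120.0), ("acme", 80.0), ("globex", 42.5)],
    )
    conn.commit()
    return conn


def order_total(conn: sqlite3.Connection, customer: str) -> dict:
    """Tool handler: a fixed, parameterised query. The model never writes SQL."""
    count, total = conn.execute(
        "SELECT COUNT(*), COALESCE(SUM(total), 0) FROM orders WHERE customer = ?",
        (customer,),
    ).fetchone()
    return {"customer": customer, "orders": count, "total": total}


# Hypothetical registry: the names and descriptions the model gets to see.
TOOLS = {
    "order_total": {
        "description": "Number and total value of orders for one customer.",
        "handler": order_total,
    },
}


def call_tool(conn: sqlite3.Connection, name: str, arguments: dict) -> str:
    """Dispatch a tool call requested by the model and return JSON text."""
    tool = TOOLS.get(name)
    if tool is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    return json.dumps(tool["handler"](conn, **arguments))
```

A user asking "how much has acme ordered?" would lead the model to request `order_total` with `{"customer": "acme"}`. Only the tools you register can be called, which is what keeps the data exposure controlled.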

You decide which models users have access to. You can also customise the models and train your own using your own datasets if you wish. We have options for model storage (S3/GCS/MinIO) and LLM hosting.

By using privately hosted LLMs, you can empower your employees to multiply their efforts with AI in a responsible and secure way. The AI companies say that they don’t use prompts in model training. With our blueprint, you no longer have to take their word for it on prompt privacy.